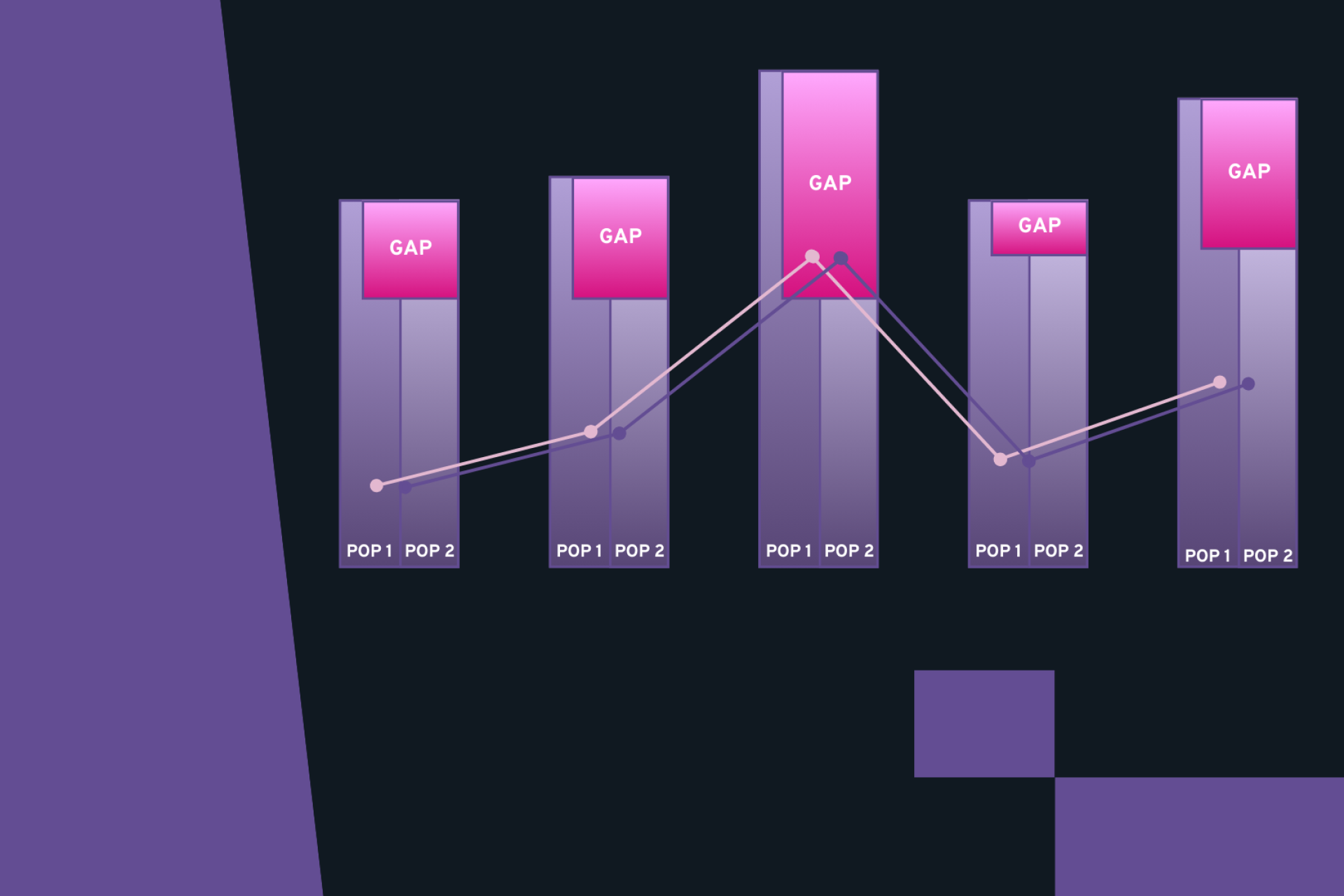

The Student Success Team has built its foundation on research and data.

To this end, in addition to the data used for the Access and Participation Plan (APP), the Data and Evaluation Manager produces numerous reports and data packs for subject areas to use. This allows work within subject areas to focus on the problems that arise within each area, rather than solely on the institutional targets.

These reports aim to present information in an easily digestible form and incorporate summaries of some of the more technical work carried out centrally. The Student Success Central Team has found that using each subject area's own data, and positioning it against other subject areas within the University, is a more engaging approach for those areas. It also encourages a culture of frequent monitoring and examination of trends, giving subject areas the agility to amend practices as contemporary issues arise.